
2. Deep Learning Basics & MLP (AI/Week 2, 2021. 8. 9. 13:50)

Key Components of DL

- Data

- Model

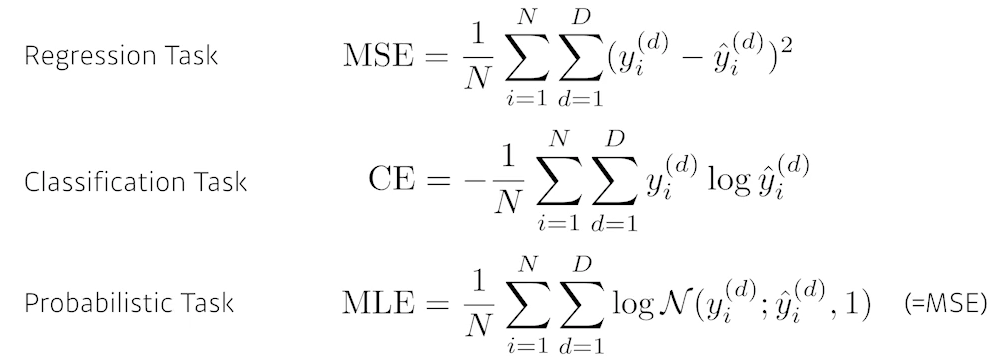

- Loss Function

- Algorithm
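The four components can be sketched in plain Python. This is a minimal illustration, not the lecture's code. The toy data (noise-free samples of y = 2x + 1), the one-unit affine model, MSE as the loss, and plain gradient descent as the algorithm are all assumptions chosen for brevity.

```python
# Data: hypothetical toy samples of y = 2x + 1 on [-1, 1].
data = [(k / 10, 2 * (k / 10) + 1) for k in range(-10, 11)]


# Model: a single affine unit with parameters w and b.
def model(x, w, b):
    return w * x + b


# Loss function: mean squared error over the whole dataset.
def mse(w, b):
    return sum((model(x, w, b) - y) ** 2 for x, y in data) / len(data)


# Algorithm: gradient descent on (w, b) using the analytic MSE gradient.
def train(lr=0.1, steps=500):
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (model(x, w, b) - y) * x for x, y in data) / n
        grad_b = sum(2 * (model(x, w, b) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = train()
print(f"w={w:.3f}, b={b:.3f}, loss={mse(w, b):.2e}")
```

Swapping any one piece (a different dataset, a deeper model, another loss, or an optimizer such as Adam) leaves the other three unchanged, which is why the notes list them separately.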

History of Deep Learning

- AlexNet (2012)

- DQN (2013)

- Encoder/Decoder, Adam (2014)

- GAN, ResNet (2015)

- Transformer (2017)

- BERT (2018)

- Big Language Models (GPT-X) (2019)

- Self-Supervised Learning (2020) - SimCLR

A Simple Framework for Contrastive Learning of Visual Representations (arxiv.org): This paper presents SimCLR: a simple framework for contrastive learning of visual representations. We simplify recently proposed contrastive self-supervised learning algorithms without requiring specialized architectures or a memory bank.

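- Universal Approximation Theorem: for any measurable function and any desired degree of accuracy, there exists a feedforward network with a single hidden layer (given enough hidden units) that approximates the function to that accuracy on a compact set K. The theorem guarantees that such a network exists, not that training will find it.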

- An affine transformation is a linear mapping followed by a translation (y = Wx + b). It preserves points, straight lines, and planes, and sets of parallel lines remain parallel after an affine transformation.

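- An MLP is built by combining affine transformations with nonlinear transformations (activation functions). The nonlinearities are essential: a composition of affine transformations is itself affine, so without them any stack of layers collapses into a single affine transformation.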
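The points above can be illustrated with a hand-built single-hidden-layer network. This is a constructive sketch of my own, not the proof of the theorem. Each hidden unit applies an affine map (x - knot) followed by ReLU, and the output layer is another affine map. With these hand-picked weights the network linearly interpolates sin on [0, π], so the error shrinks as hidden units are added. Replacing ReLU with the identity makes the whole network a single affine map, which cannot fit sin no matter how many units it has. The function names, the target function, and the knot placement are all illustrative assumptions.

```python
import math


def relu(z: float) -> float:
    return max(0.0, z)


def build_relu_net(f, a, b, n_hidden):
    """Weights of a 1-hidden-layer net whose ReLU version linearly interpolates f
    at n_hidden + 1 evenly spaced knots on [a, b] (hand-set, not trained)."""
    knots = [a + (b - a) * i / n_hidden for i in range(n_hidden + 1)]
    values = [f(x) for x in knots]
    slopes = [(values[i + 1] - values[i]) / (knots[i + 1] - knots[i])
              for i in range(n_hidden)]
    # Hidden unit i: affine map x - knots[i] (input weight 1, bias -knots[i]).
    hidden_biases = [-knots[i] for i in range(n_hidden)]
    # Output weight i: change of slope at knots[i]; output bias: f(a).
    out_weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, n_hidden)]
    return hidden_biases, out_weights, values[0]


def forward(x, hidden_biases, out_weights, out_bias, act=relu):
    # affine -> nonlinearity -> affine
    return out_bias + sum(w * act(x + c) for w, c in zip(out_weights, hidden_biases))


def max_error(f, a, b, n_hidden, act=relu, n_test=1000):
    net = build_relu_net(f, a, b, n_hidden)
    xs = [a + (b - a) * i / n_test for i in range(n_test + 1)]
    return max(abs(f(x) - forward(x, *net, act=act)) for x in xs)


for n in (2, 8, 32):
    print(n, max_error(math.sin, 0.0, math.pi, n))
# Identity "activation": the whole network collapses to one affine map.
print("no nonlinearity:", max_error(math.sin, 0.0, math.pi, 32, act=lambda z: z))
```

The ReLU error drops roughly with the square of the knot spacing, while the identity version stays near 0.5 (the best any straight line can do against sin on [0, π]).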

MLP Implementation

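- kaiming_normal_ (the signature below is the LibTorch C++ API; the Python equivalent is torch.nn.init.kaiming_normal_(tensor, a=0, mode='fan_in', nonlinearity='leaky_relu'))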

Tensor torch::nn::init::kaiming_normal_(Tensor tensor, double a = 0, FanModeType mode = torch::kFanIn, NonlinearityType nonlinearity = torch::kLeakyReLU)

Fills the input Tensor with values according to the method described in "Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification" - He, K. et al. (2015), using a normal distribution. Also known as He initialization. No gradient will be recorded for this operation.
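A minimal PyTorch sketch of how kaiming_normal_ might be used inside an MLP. The class name MLP, the layer names lin_1/lin_2, and the sizes 784/256/10 are hypothetical choices for illustration. For a ReLU layer (or the default leaky_relu with a=0) in fan_in mode, He initialization draws weights from N(0, std²) with std = sqrt(2 / fan_in), which the script checks empirically.

```python
import math

import torch
import torch.nn as nn


class MLP(nn.Module):
    """Hypothetical MLP sketch: affine -> ReLU -> affine (sizes are illustrative)."""

    def __init__(self, xdim=784, hdim=256, ydim=10):
        super().__init__()
        self.lin_1 = nn.Linear(xdim, hdim)
        self.lin_2 = nn.Linear(hdim, ydim)
        self.init_params()

    def init_params(self):
        # He (Kaiming) normal init: std = sqrt(2 / fan_in) for ReLU layers.
        nn.init.kaiming_normal_(self.lin_1.weight, mode="fan_in", nonlinearity="relu")
        nn.init.zeros_(self.lin_1.bias)
        # Defaults (a=0, mode="fan_in", nonlinearity="leaky_relu") give the same gain here.
        nn.init.kaiming_normal_(self.lin_2.weight)
        nn.init.zeros_(self.lin_2.bias)

    def forward(self, x):
        return self.lin_2(torch.relu(self.lin_1(x)))


torch.manual_seed(0)
mlp = MLP()
expected = math.sqrt(2.0 / 784)  # fan_in of lin_1 is its input size
print(mlp.lin_1.weight.std().item(), expected)
print(mlp(torch.randn(32, 784)).shape)
```

The scaling keeps the variance of activations roughly constant from layer to layer when ReLU zeroes about half of the inputs, which is the motivation given in the He et al. paper.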

- view

Returns a new tensor with the same data but a different shape (the data is not copied).

https://pytorch.org/docs/stable/generated/torch.Tensor.view.html (torch.Tensor.view, PyTorch 1.9.0 documentation)
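A short sketch of view's behavior. The tensors and the MNIST-sized batch shape are illustrative assumptions. It shows that -1 infers a dimension, that a view shares storage with the original, and that a non-contiguous tensor (e.g. after a transpose) needs .contiguous() before .view(); .reshape() is the alternative that copies only when necessary.

```python
import torch

x = torch.arange(12)            # shape (12,): 0, 1, ..., 11
y = x.view(3, 4)                # same 12 elements, shape (3, 4)
z = x.view(-1, 6)               # -1 lets PyTorch infer the size: shape (2, 6)

# A view shares storage with the original tensor, so writes show up in both.
y[0, 0] = 100
print(x[0].item())              # 100

# view() needs a memory layout compatible with the new shape. A transposed
# tensor is not contiguous, so make it contiguous first (or use reshape(),
# which copies only when it has to).
t = y.t()                       # shape (4, 3), non-contiguous
flat = t.contiguous().view(-1)

# Typical MLP use: flatten a batch of images before the first Linear layer.
imgs = torch.zeros(8, 1, 28, 28)            # hypothetical MNIST-sized batch
flat_imgs = imgs.view(imgs.size(0), -1)     # shape (8, 784)
print(z.shape, flat.shape, flat_imgs.shape)
```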

- no_grad & eval

https://coffeedjimmy.github.io/pytorch/2019/11/05/pytorch_nograd_vs_train_eval/ ("What exactly is the difference between no_grad() and eval() in PyTorch?", coffeedjimmy.github.io)
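A small sketch of the difference, assuming a hypothetical MLP with a Dropout layer so that train/eval mode matters. model.eval() changes how layers such as Dropout and BatchNorm behave but still lets autograd record the graph; torch.no_grad() stops gradient recording (saving memory and compute) but does not change layer behavior. At inference time the two are usually combined.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# Hypothetical small MLP; Dropout makes train/eval mode visibly different.
model = nn.Sequential(nn.Linear(4, 16), nn.ReLU(), nn.Dropout(p=0.5), nn.Linear(16, 2))
x = torch.randn(3, 4)

# eval(): Dropout is disabled, but autograd still builds a graph.
model.eval()
out_eval = model(x)
print(out_eval.requires_grad)      # True

# no_grad(): no graph is recorded, but Dropout is still active in train mode.
model.train()
with torch.no_grad():
    out_nograd = model(x)
print(out_nograd.requires_grad)    # False

# Typical inference: use both together.
model.eval()
with torch.no_grad():
    a = model(x)
    b = model(x)
print(torch.equal(a, b))           # True: deterministic without dropout
```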
